We think it’s appropriate to kick off the new year with an examination of what the future holds for user experience and product development.

To stay ahead of the curve in user research and UX design, we must keep in mind the new technologies that are currently under development and could influence the fundamental ways in which people interact with the products we design and develop. We’ve seen the advent of such disruptive technologies repeatedly throughout history, including the automobile, telecommunications (radio, the telephone, and television), and the personal computer. In this column, we’ll describe several new technologies that have the potential to change how we interact with technology and the world. Some of these technologies may be many years away from maturity, but they could have a massive impact in the years to come.

Brain-Computer Interface

The Brain-Computer Interface (BCI) has been under development since the 1970s. Its goal is to enable people to provide input to and control systems using thought alone. To accomplish this, researchers such as Philip Kennedy and John Donoghue have studied ways to measure brain activity and convert the data into input for computer systems. Over the years, BCI applications have achieved some success in partially restoring vision to blind patients, controlling prosthetic limbs and robots, generating speech, gaming, and cognitive imaging, among other areas.

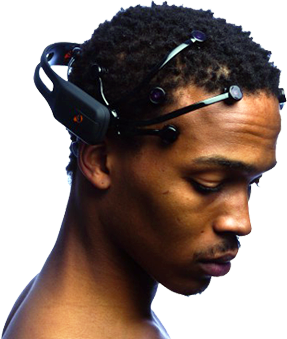

Recent work has aimed to make BCIs more flexible and less invasive, progressing from neuronal implants in the brain to headsets that use EEG (electroencephalography), like the one shown in Figure 1. Many hurdles remain before this technology can reach its full potential, but it’s easy to see how BCIs could completely transform the way we interact with machines.

Figure 1—EEG headset

Instead of providing input through error-prone touchscreens that force us to make awkward gestures with our hands, or through QWERTY keyboards whose layout reflects the mechanical constraints of 19th-century typewriters rather than typing efficiency, we could use our thoughts to provide input directly to computing systems. Rather than typing and using a mouse, which can contribute to carpal tunnel syndrome and other repetitive stress injuries, we could enter text and commands with ease. We would no longer have to fumble with tiny mobile device user interfaces that offer limited interaction models.

Instead, we would be able to do just about anything we could imagine from wherever we happen to be, through completely new modes of interaction. We could enjoy complete gaming experiences on our mobile phones and notebook computers, without any need for special controllers. We’d be able to input destinations into our cars’ navigation systems or select music while driving without taking our hands from the steering wheel or our eyes from the road. We might even be able to communicate soundlessly and create digital art that is a direct expression of our imaginations.

Of course, such dramatic change would also demand a complete shift in how we think about product design. For example, designers would need to address challenges like accidental activation. How much of our input should be automatic and how much intentional, and how could a system distinguish between the two? Given all the chatter in our heads, how could we organize our thoughts into coherent user interactions? When people communicate through their thoughts, how can we ensure that their fleeting thoughts don’t become unintended messages? And what are the safety implications of using thought to operate heavy machinery or drive a car?

Although BCIs can free up the hands, does that mean people would be doing nothing but thinking? If people were controlling games with their minds, what would they be doing with their hands? Should their hands be idle, or should people use them to enhance the feeling of realism, like pretending to hold a gun or a guitar? How many things can a person do simultaneously? If someone is playing a complicated piano piece, could they also edit the sheet music as they play?

The possibilities for the applications of a Brain-Computer Interface are nearly endless, which could open up a whole new world of interaction design. It will be exciting to see what new interaction models emerge as this new technology matures.

Bionic Vision

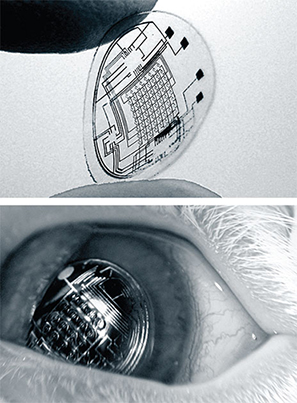

Typically, when people refer to wearable displays, they’re thinking of awkward glasses, goggles, or some other head-mounted display that provides a user with a heads-up display. However, engineer Babak Parviz and his colleagues have envisioned something completely different. For several years, they have been working on a bionic contact lens, shown in Figure 2, that would enable the kind of augmented vision we’ve seen in science-fiction fantasies for decades. If Parviz and his team succeed, they would give us an entirely new way of interacting with technology. No longer would we be tied to our desks or need to lug around notebook computers. Nor would we be limited to the small displays of portable devices like smartphones or tablets. This technology would free us from such constraints and allow us to consume information in an entirely different way.

Figure 2—A bionic contact lens

These future possibilities would bring new user experience challenges. We must discover how this new display medium would alter the way in which people interact with technology, and we would likely be surprised by what people want to do with bionic vision and what uses they avoid. It seems obvious that people would want quick, easy access to information about the world around them, for example, looking up bus and train schedules as they hustle down busy city streets. Less obvious is whether people would want to watch movies at full IMAX resolution while sitting on a plane during a long flight.

We must also discover the most effective ways of presenting information that people can easily consume while interacting with the world. Having information available at all times sounds exciting, but it’s entirely possible that users would absolutely hate having an augmented-reality display in view constantly while trying to live their lives.

Other challenges would need to be overcome. Would people cling to traditional displays, and if so, why? Would people experience motion sickness if information appeared on a static display that doesn’t move with their environment as they turn their heads? How can we avoid the safety issues that might arise when people are distracted from the real world as they walk down a street or drive a car? If people were viewing displays that only they could see, how would they share what they’re seeing with other people? Answering such questions is essential to determining how people might actually put this new technology to use.

Affective Computing

Historically, people have perceived computer systems as aloof, emotionless, and insensitive entities, like HAL in the visionary 2001: A Space Odyssey. While researchers and engineers like John Donoghue, Philip Kennedy, and Babak Parviz are seeking to change the way we interact with machines, Rosalind Picard’s goal is to change the way machines interact with us through the development of affective computing: an effort to give machines the ability to recognize and respond to emotions, simulating empathy. Picard heads the Affective Computing Research Group at the MIT Media Lab. As a discipline, affective computing builds on groundbreaking research such as Paul Ekman’s work on involuntary microexpressions, Klaus Scherer’s work on vocal emotional expression, and the long line of research on galvanic skin response (GSR). GSR is a well-established measure of physiological arousal and, thus, a useful indicator of how intensely users experience an emotion, although on its own it does not reveal whether that emotion is positive or negative.

An affective computing system that combined these different means of affective measurement could respond to a user’s current emotional state—for example, safety systems could respond to fear. Other planned applications of affective computing include systems that display emotion, resulting in more realistic digital pets or representatives in virtual systems for customer service and technical support.

Affective computing could significantly alter our relationship with technology. What we currently treat as a tool, we may someday interact with like a friend. Products from different brands might each have a distinct emotional feel. At some point, interaction design might encompass the design of systems that have different personality types and responses that range from stoic to empathetic to humorous. This would open the door to a new frontier of hedonic design. A system that could sense a user’s emotional state might learn to recognize positive emotional states in response to certain content or methods of display, then adjust its output accordingly to maximize the user’s enjoyment. Gaming systems incorporating affective computing could adjust their difficulty in response to a user’s frustration. Music players could create playlists that conform to a user’s mood.
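The column doesn’t say how such a game would work. Purely as an illustration, the Python sketch below assumes that some affective sensor (for example, one fusing skin-conductance and facial-expression signals) already produces a frustration score between 0 and 1. The class name, thresholds, and step size are all hypothetical. The sketch smooths the score so a single noisy reading doesn’t swing the game, eases the difficulty when frustration stays high, and raises it when the player seems calm enough to be under-challenged.

```python
from dataclasses import dataclass, field


@dataclass
class DifficultyController:
    """Hypothetical controller that adjusts game difficulty from a frustration
    score in [0, 1]. The score is ASSUMED to come from an affective sensor."""

    difficulty: float = 0.5   # 0.0 = easiest, 1.0 = hardest
    smoothing: float = 0.3    # weight given to each new reading (exponential smoothing)
    high: float = 0.7         # smoothed frustration above this: ease off
    low: float = 0.3          # smoothed frustration below this: add challenge
    step: float = 0.1         # difficulty change per adjustment
    frustration: float = field(default=0.0, init=False)  # smoothed estimate

    def update(self, reading: float) -> float:
        """Fold one raw frustration reading into the estimate; return the new difficulty."""
        reading = min(max(reading, 0.0), 1.0)  # clamp out-of-range sensor values
        # Exponential smoothing: a single spike moves the estimate only partway.
        self.frustration = (1 - self.smoothing) * self.frustration + self.smoothing * reading
        if self.frustration > self.high:
            self.difficulty = max(0.0, self.difficulty - self.step)
        elif self.frustration < self.low:
            self.difficulty = min(1.0, self.difficulty + self.step)
        # Between low and high, difficulty is left unchanged (a dead band).
        return self.difficulty


# Illustrative usage with made-up readings for a player who grows frustrated.
controller = DifficultyController()
for reading in [0.2, 0.6, 0.9, 0.95, 0.9, 0.85]:
    level = controller.update(reading)
    print(f"reading={reading:.2f} frustration={controller.frustration:.2f} difficulty={level:.1f}")
```

The gap between the two thresholds acts as a dead band, so difficulty holds steady while frustration stays in a comfortable middle range. Choosing those values for real players would itself be a user research question.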

The implications of affective computing for user experience research are quite significant. If affective computing gave us a reliable means of measuring a user’s emotional state, we could identify which product or user interface designs result in frustration or delight, allowing us to better support the design of a positive user experience.

As such affective computing capabilities develop, we must identify important user considerations. How much emotional response and empathy would be too much? Would users be comfortable with emotional responses from computers? How much would different users’ emotional responses to the same user experience vary? Would users prefer some system personality types over others? Do mood changes alter users’ preferences for interactions? Are emotional responses to design elements consistent, or do they change over time? How can software systems alter their design on the fly to maximize users’ enjoyment? Successful implementation of affective computing would require an understanding of all of these issues.

Conclusion

In this column, we’ve taken a peek at the future. We’ve discussed what these technologies might make possible, but we have yet to see what form they will take or how their developers might bring them to market, and we don’t yet know what such products’ capabilities might ultimately be. Nor have we touched on the ethical and social implications of more intimately connecting man and machine.

Transformative technologies such as these may have far-reaching impacts on how we interact with each other and with machines, how we engage in our professions, as well as how we preserve our health and safety. Our global society must determine how to best implement these technologies in useful and responsible ways.

Prospects for these new technologies are very exciting but also a little daunting, even more so when we start to imagine a world in which all of them exist at once. Imagine riding down a mountain on a bike while your contact-lens display shows you three different route options, affective computing algorithms recommend the one you would enjoy most, and you select your route with your mind. Such scenarios speak to a future in product design and user experience that would be both challenging and thrilling. We’re excited to see what types of user experiences people’s imaginations might conceive of once these technologies remove some of the limitations that bound user experience today. Let us know what you would design with these capabilities. What can you imagine?